Published: 2025. 10. 10.

Author: ZAKE SHIM

속성의 합성(Synthesis of properties)


Synthesis of Properties

/ Definition by zake shim

In this article I explain Synthesis of Properties (attribute synthesis), the most important prerequisite for using Midjourney as a creator's tool.

I cannot say for certain that this is how Midjourney officially operates, but it is a concept I want to introduce to my readers. It is fair to say I started writing this article just to define it.

0. Overview

"Synthesis of properties" refers to the process by which various properties within a prompt are separated and combined to form a visual representation.

- The AI interprets the structure, context, and meaning of the words and sentences entered by the user in the prompt.

- The CLIP model extracts visual property information based on the similarity between the text and the image.

- The diffusion model gradually refines random noise into a detailed image.

1. Text Recognition: Natural Language Processing (NLP)

Generative AI first processes the user's text prompt with a natural language processing (NLP) model, which analyzes the structure, context, and meaning of each word and sentence.

During this process, the NLP model analyzes the following elements:

Word Meaning: It determines what concept or object a word represents. For example, "sunset" is recognized as "dusk" or "the glow of the evening sky."

Sentence Structure: It understands how sentences are structured and the relationships between words. This involves identifying the position and role of adjectives, nouns, and verbs within a sentence.

For example, in the sentence "a beautiful sunset over the ocean," "beautiful" is interpreted as an adjective modifying "sunset," reinforcing the noun.

Contextual Meaning: It infers more complex meanings from combinations of words.

For example, in the expression "cyberpunk city," the AI combines the style "cyberpunk" with the location "city" and infers a distinctive image style.
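The word roles described in this section can be illustrated with a small sketch. This is a toy under stated assumptions: the `LEXICON` table and the `tag_roles` and `modifiers_of` helpers are hypothetical and hand-written for this example. A real text encoder learns these relationships implicitly from data and does not use a lookup table like this.

```python
# Hypothetical hand-written lexicon (an assumption for illustration only).
LEXICON = {
    "a": "determiner", "the": "determiner",
    "beautiful": "adjective", "cyberpunk": "adjective",
    "sunset": "noun", "ocean": "noun", "city": "noun",
    "over": "preposition",
}


def tag_roles(prompt):
    """Pair each word of the prompt with its role from the toy lexicon."""
    return [(word, LEXICON.get(word, "unknown")) for word in prompt.lower().split()]


def modifiers_of(prompt, noun):
    """Return the adjectives that directly precede `noun` in the prompt."""
    tagged = tag_roles(prompt)
    modifiers = []
    for i, (word, _role) in enumerate(tagged):
        if word != noun:
            continue
        j = i - 1
        # Walk left while the previous words are adjectives.
        while j >= 0 and tagged[j][1] == "adjective":
            modifiers.insert(0, tagged[j][0])
            j -= 1
    return modifiers


print(modifiers_of("a beautiful sunset over the ocean", "sunset"))
```

In this sketch "beautiful" attaches to "sunset" and nothing attaches to "ocean", which mirrors the sentence-structure example in the text.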

2. Inference: Translating meaning and attributes between words

Once text analysis is complete, the CLIP model translates the meanings identified from the words and sentences into attributes for image generation.

Pre-training data plays a crucial role in this process.

Generative AI is trained on a vast dataset of image-text pairs, which allows it to learn which visual characteristics a given text expression is likely to have. For example, the word "mountain" is associated with the many mountain images the model has seen, so it learns the general form and characteristics that the word implies.

Based on this, the AI infers the associated image features (e.g., color, lighting, style) and converts them into visually expressible concepts (inference).
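The idea of matching text to visual features by similarity can be sketched with cosine similarity over embeddings. The vectors below are hand-made, three-dimensional stand-ins and are not real CLIP outputs; the file names and the meaning assigned to each axis are assumptions for illustration.

```python
import math

# Toy "embeddings". Assumed axes: [warm colors, rocky shapes, water].
TEXT_EMBEDDINGS = {
    "sunset": [0.9, 0.1, 0.3],
    "mountain": [0.1, 0.9, 0.1],
}
IMAGE_EMBEDDINGS = {
    "orange_sky.png": [0.8, 0.0, 0.4],
    "alpine_peak.png": [0.2, 0.95, 0.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(word):
    """Return the image whose embedding is most similar to the word's."""
    text_vec = TEXT_EMBEDDINGS[word]
    return max(
        IMAGE_EMBEDDINGS,
        key=lambda name: cosine_similarity(text_vec, IMAGE_EMBEDDINGS[name]),
    )


for word in TEXT_EMBEDDINGS:
    print(word, "->", best_match(word))
```

A trained model works with vectors of hundreds of dimensions learned from image-text pairs, but the comparison step has the same shape as this sketch.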

3. Image Generation: Neural Network-Based Conversion

The AI begins the image generation process based on information extracted from the text prompt.

The core technology used here is a deep learning generative model, typically a diffusion model. Earlier systems used GANs (Generative Adversarial Networks) or VQ-VAEs (Vector Quantized Variational Autoencoders). These generators are often paired with CLIP (Contrastive Language-Image Pre-training), which links text to images rather than generating images itself.

CLIP is a model that learns the association between text and images and can evaluate the semantic similarity between them. Through this, it identifies the image features that best fit the given text.

The diffusion (image generation) model, guided by the features obtained from CLIP, generates the specific details and styles of an image appropriate for the text prompt.

As noted at the outset, this cannot be confirmed officially, but Midjourney appears to combine a CLIP-style model with a diffusion model.
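The "from noise to a detailed image" idea can be sketched as an iterative loop. This is a deliberately simplified toy: a real diffusion model uses a neural network to predict and remove noise at each step, conditioned on the text, whereas this sketch already knows the target and simply moves toward it. The function names `toy_denoise` and `mean_abs_error` are hypothetical.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Start from random noise and move toward `target` in small steps.

    Returns the list of intermediate "images" (lists of pixel values).
    """
    rng = random.Random(seed)
    # Pure noise: one random value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    history = [list(image)]
    for step in range(steps):
        # Close a growing fraction of the remaining gap; the last step closes it.
        rate = 1.0 / (steps - step)
        image = [px + rate * (t - px) for px, t in zip(image, target)]
        history.append(list(image))
    return history


def mean_abs_error(image, target):
    """Average absolute difference between an image and the target."""
    return sum(abs(a - b) for a, b in zip(image, target)) / len(target)


target = [0.0, 0.5, 1.0, 0.5, 0.0]  # stand-in for the "detailed image"
history = toy_denoise(target, steps=10)
for i in (0, 5, 10):
    print(f"step {i}: error = {mean_abs_error(history[i], target):.4f}")
```

The printed error shrinks from step to step, which is the only point of the sketch: the image emerges gradually rather than in a single pass.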

4. Attribute Combination and Interaction Understanding

When a prompt contains multiple attributes (e.g., "a futuristic city with neon lights"), the AI interprets each attribute separately and then combines them to create a single image.

Prioritization between attributes:

For example, in the phrase "futuristic city," "futuristic" is applied as an adjective modifying the core word "city."

If the word "mega" is added between "futuristic" and "city," the AI interprets the prompt as "futuristic megacity" and generates a futuristic city that is larger or more complex in its details.

Attribute association:

For example, when the expressions "neon lights" and "futuristic city" are used together, the AI has learned from its training data that "futuristic" and "neon lights" commonly appear together in the same visual styles, and it reflects this in the image.
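Attribute association can be illustrated by counting how often attributes appear together in captions. The captions below are invented for this sketch and the helper names are hypothetical; a real model does not keep explicit counts but encodes such regularities in its learned weights.

```python
from collections import Counter
from itertools import combinations

# Made-up caption tags standing in for training data (an assumption).
CAPTIONS = [
    {"futuristic", "city", "neon lights"},
    {"futuristic", "city", "flying cars"},
    {"futuristic", "neon lights", "rain"},
    {"medieval", "city", "torches"},
]


def co_occurrence(captions):
    """Count how many captions contain each unordered pair of attributes."""
    counts = Counter()
    for tags in captions:
        for pair in combinations(sorted(tags), 2):
            counts[pair] += 1
    return counts


def association(counts, a, b):
    """Look up the co-occurrence count for a pair, in either order."""
    return counts[tuple(sorted((a, b)))]


counts = co_occurrence(CAPTIONS)
print(association(counts, "futuristic", "neon lights"))
print(association(counts, "medieval", "neon lights"))
```

In this toy data "futuristic" and "neon lights" co-occur while "medieval" and "neon lights" never do, which is the kind of regularity the text describes the AI picking up.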

5. Limitations and Solutions for Attribute Synthesis

The AI may oversimplify the relationships between the words in a prompt or misrepresent a specific context. It can also overinterpret a style based on context.

Users must review each generated image themselves and iterate, removing or replacing words in the prompt until the AI produces the image that best suits their purpose.

As prompts become longer or more complex, the relationships between words and the AI's multimodal inferences change. Therefore, refine the prompt (organize, summarize, edit) and reapply it until the desired result is achieved.
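The review-and-replace loop can be supported by generating prompt variants systematically. The sketch below is a hypothetical helper, not a Midjourney feature: it swaps one phrase at a time for each alternative, and an empty string as the alternative drops the phrase.

```python
def prompt_variants(prompt, replacements):
    """Return one new prompt per (phrase, alternative) pair.

    `replacements` maps a phrase in the prompt to a list of alternatives.
    An empty-string alternative removes the phrase.
    """
    variants = []
    for phrase, alternatives in replacements.items():
        if phrase not in prompt:
            continue  # nothing to replace for this phrase
        for alternative in alternatives:
            candidate = prompt.replace(phrase, alternative)
            # Collapse any double spaces left behind by a removal.
            variants.append(" ".join(candidate.split()))
    return variants


base = "a futuristic city with neon lights"
options = {
    "futuristic": ["retro-futuristic", ""],
    "neon lights": ["holographic signs"],
}
for variant in prompt_variants(base, options):
    print(variant)
```

Changing one phrase per variant keeps each test isolated, so a difference in the generated image can be traced back to a single wording change.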

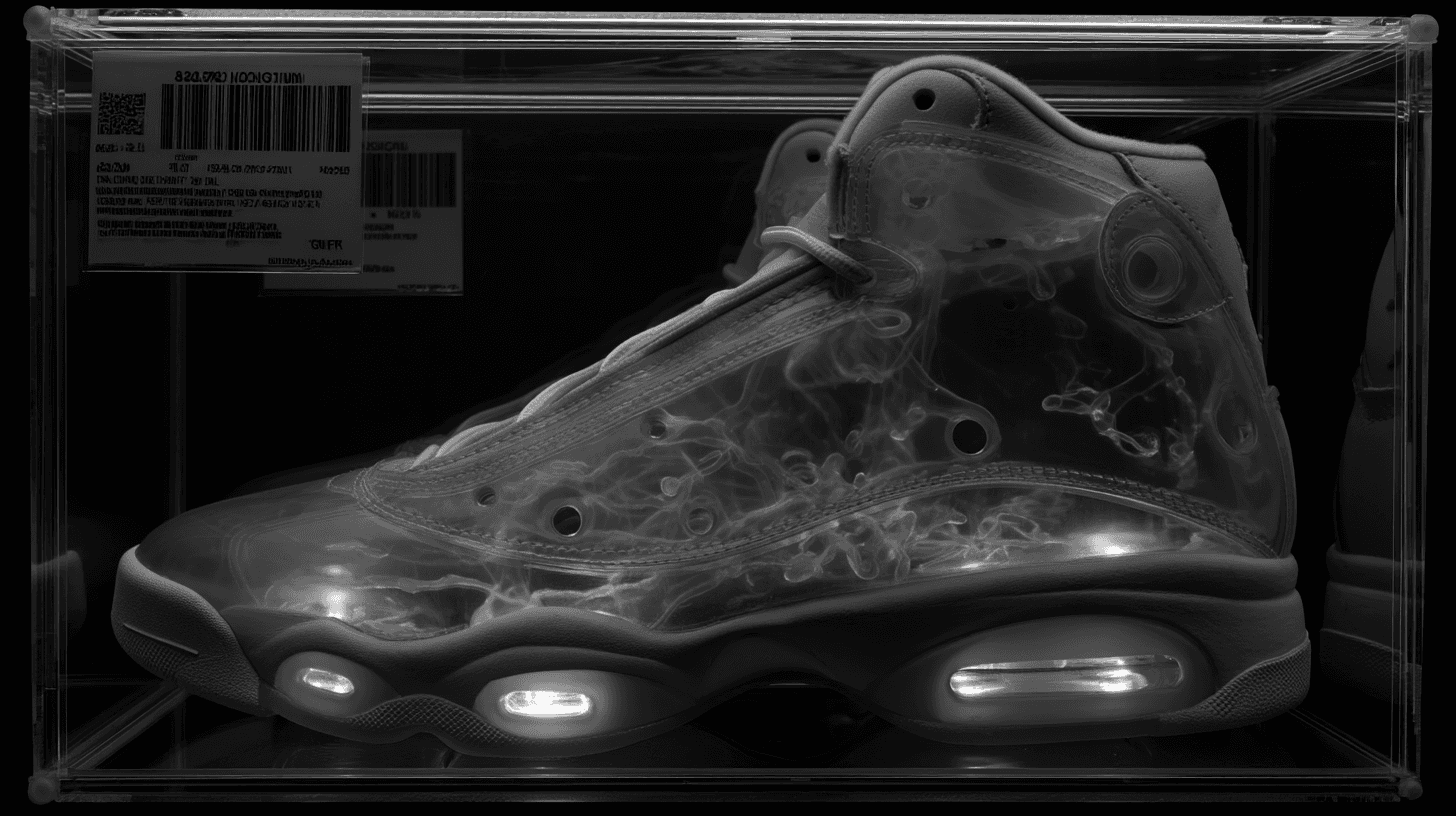

Because text prompts are limited in what they can express literally, users can add image prompts alongside text prompts to get the most out of the AI's inference.

Image prompts are the most visually intuitive kind of prompt, but the resolution and structural layout of the reference image can affect the results. Controlling this requires choosing source images that suit the intended purpose and supplementing them with supporting text prompts. Adjusting reference-image weighting parameters such as --sw (style weight) and --iw (image weight) controls how strongly the reference images influence the generated result.
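As a rough illustration, a prompt that combines an image prompt, supporting text, and weights can be assembled as a string. The `build_prompt` helper is hypothetical and the URLs are placeholders. The sketch assumes the image URL goes before the text, that `--iw` follows an image prompt, and that `--sw` accompanies a style reference given with `--sref`. Valid weight ranges depend on the Midjourney version and are not checked here, so consult the current documentation before relying on specific values.

```python
def build_prompt(text, image_url=None, image_weight=None,
                 style_ref_url=None, style_weight=None):
    """Assemble a Midjourney-style prompt string (illustrative only)."""
    parts = []
    if image_url:
        parts.append(image_url)  # image prompt precedes the text
    parts.append(text)
    if image_url and image_weight is not None:
        parts.append(f"--iw {image_weight}")  # influence of the image prompt
    if style_ref_url:
        parts.append(f"--sref {style_ref_url}")
        if style_weight is not None:
            parts.append(f"--sw {style_weight}")  # influence of the style reference
    return " ".join(parts)


print(build_prompt(
    "a futuristic city with neon lights",
    image_url="https://example.com/layout-reference.png",
    image_weight=1.5,
))
print(build_prompt(
    "a futuristic city with neon lights",
    style_ref_url="https://example.com/style-reference.png",
    style_weight=200,
))
```

Keeping the text constant while changing only a weight makes it easier to see how much the reference image, rather than the wording, is driving the result.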
